International DevOps Certification Academy™

How Do You Enable Low Risk DevOps Code Deployments In Your Production?

In many IT organizations, production deployments are cumbersome and stressful. To avoid that stress, teams tend to reduce the frequency of production deployments as much as possible. Organizations then inevitably face deployments with larger batch sizes, which cause even larger problems. This vicious cycle only gets worse, and unfortunately it describes the state of most of the largest organizations, including those whose core businesses are software and technology.

Your DevOps Team Has Built-In Control and Risk Mitigation Mechanisms

In a typical IT organization, the development team builds software and the operations team takes care of its deployment. In contrast, the DevOps methodology shifts control and risk mitigation mechanisms away from other, independent teams to your own self-sufficient and competent team, and to automated deployment and peer review processes, again within your own team.

Because all production and non-production environments are assets as important as the software your DevOps team produces, your DevOps team embraces these three important principles:

- Ensure consistency of all environments in terms of operating systems, components, interfaces, patch levels, all other dependencies and of course your own software and configurations.

- Fixing any impediment that breaks the consistency of your environments is a top-priority activity.

- Deploy with the same techniques to all environments, so that rehearsing production deployments becomes one of your daily tasks and habits.
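The first two principles are easier to enforce when drift between environments is detected automatically. Below is a minimal Python sketch, assuming each environment can export a manifest that maps component names (operating system, libraries, your own application) to versions or patch levels; the manifest format, the component names and the `find_drift` function are hypothetical illustrations, not part of any specific tool.

```python
from typing import Dict, Optional, Tuple

# Hypothetical manifest format: component name -> version or patch level.
Manifest = Dict[str, str]


def find_drift(reference: Manifest, target: Manifest) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return every component whose version differs between two environments.

    A component present in only one environment shows up with None on the other side.
    """
    drift = {}
    for component in sorted(set(reference) | set(target)):
        expected = reference.get(component)
        actual = target.get(component)
        if expected != actual:
            drift[component] = (expected, actual)
    return drift


# Usage: compare staging (the reference) with production.
staging = {"os": "ubuntu-22.04", "openssl": "3.0.2", "app": "1.4.0"}
production = {"os": "ubuntu-22.04", "openssl": "3.0.1", "app": "1.4.0"}
print(find_drift(staging, production))  # {'openssl': ('3.0.2', '3.0.1')}
```

In this sketch, any non-empty result would be treated as a priority-one impediment, in line with the second principle.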

Your Built-In Self-Service Deployment Mechanism

Your automated self-service deployment mechanism follows this pattern:

- Code and check in to trunk.

- Build deployable packages.

- Validate deployable packages, dependencies and configuration readiness.

- Validate environment readiness.

- Run automated testing.

- Record all validation and automated test results for audit and compliance reasons.

- Deploy packages to target environment.

- Run automated tests in target environment to validate deployment.

- Monitor system, performance and activity metrics of target environment.

- Provide fast feedback, and correct or roll back the deployment if anything goes wrong.
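The validate, test, deploy, verify and roll back steps above can be sketched as a small Python orchestrator. This is a minimal illustration under stated assumptions: each step is a placeholder callable that returns True or False, the step names are hypothetical, and code check-in, package builds and continuous monitoring are assumed to happen outside this function.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# A step is a named check or action that reports success (True) or failure (False).
Step = Tuple[str, Callable[[], bool]]


@dataclass
class PipelineRun:
    audit_log: List[Tuple[str, bool]] = field(default_factory=list)
    rolled_back: bool = False


def run_pipeline(pre_deploy: List[Step], deploy: Step, post_deploy: List[Step],
                 rollback: Callable[[], None]) -> PipelineRun:
    run = PipelineRun()

    # Package, environment and test gates run before the target is touched.
    for name, step in pre_deploy:
        passed = step()
        run.audit_log.append((name, passed))  # recorded for audit and compliance
        if not passed:
            return run  # nothing was deployed, so there is nothing to roll back

    # Deploy, then validate the deployment inside the target environment.
    for name, step in [deploy] + post_deploy:
        passed = step()
        run.audit_log.append((name, passed))
        if not passed:
            rollback()  # fast feedback: undo the deployment on the first failure
            run.rolled_back = True
            return run
    return run


# Usage with placeholder steps; real steps would call your build, test and deploy tooling.
result = run_pipeline(
    pre_deploy=[("validate packages", lambda: True),
                ("validate environment", lambda: True),
                ("automated tests", lambda: True)],
    deploy=("deploy to target", lambda: True),
    post_deploy=[("smoke tests in target", lambda: False)],
    rollback=lambda: print("rolling back deployment"),
)
print(result.rolled_back)  # True
print(result.audit_log)
```

The design choice here is that a failure before deployment simply stops the run, while a failure after deployment triggers the rollback, and both cases leave a complete audit trail.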

Your Deployments Are NOT Identical To Your Releases

With an automated self-service mechanism to deploy your code to non-production and production environments, your DevOps team is now empowered to deploy to your systems daily. Because not every deployment can be categorized as a feature or benefit release for the clients your team serves, your DevOps team needs to architect your applications and/or environments so that releases do not require code changes and further associated deployments.

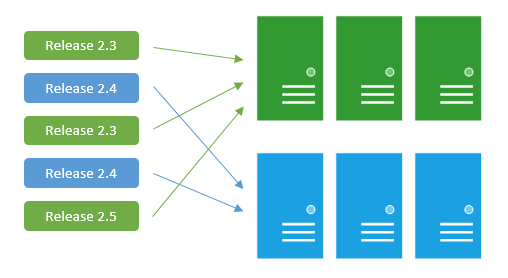

Blue-Green Deployment Pattern

One of the challenges with automating deployment is the cut-over itself, taking software from the final stage of testing to live production. You usually need to do this quickly in order to minimize downtime. The blue-green deployment approach does this by ensuring you have two production environments, as identical as possible. At any time one of them, let's say blue for the example, is live. As you prepare a new release of your software you do your final stage of testing in the green environment. Once the software is working in the green environment, you switch the router so that all incoming requests go to the green environment - the blue one is now idle.
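The cut-over can be illustrated with a tiny routing sketch in Python. It assumes two environments reachable at hypothetical base URLs (`http://blue.internal`, `http://green.internal`); in practice the "router" would be a load balancer, reverse proxy or DNS entry, and `BlueGreenRouter` is an illustrative name, not a real library class.

```python
from typing import Dict


class BlueGreenRouter:
    """Sends all traffic to whichever of two identical environments is live."""

    def __init__(self, environments: Dict[str, str], live: str = "blue"):
        self.environments = environments  # e.g. {"blue": base_url, "green": base_url}
        self.live = live

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def route(self, path: str) -> str:
        # Every incoming request goes to the live environment only.
        return self.environments[self.live] + path

    def cut_over(self, idle_tests_passed: bool) -> str:
        # Switch only after the final stage of testing passed on the idle side.
        if idle_tests_passed:
            self.live = self.idle
        return self.live


router = BlueGreenRouter({"blue": "http://blue.internal", "green": "http://green.internal"})
print(router.route("/checkout"))  # http://blue.internal/checkout
router.cut_over(idle_tests_passed=True)
print(router.route("/checkout"))  # http://green.internal/checkout
```

Because the previously live environment is left untouched, rolling back in this sketch is just another `cut_over` in the opposite direction.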

Blue-Green Deployment Pattern

(Source: Octopus Deploy)

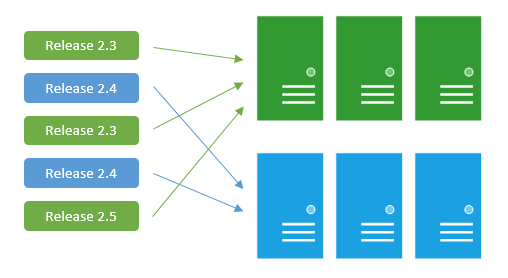

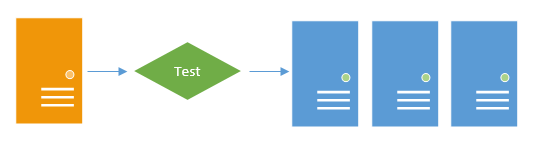

Canary Deployment Pattern

(aka The Dark Launch)

Canaries were once regularly used in coal mining as an early warning system. Toxic gases such as methane or carbon monoxide in the mine would kill the bird before affecting the miners. Signs of distress from the bird indicated to the miners that conditions were unsafe.

Inspired by the canaries of the mining industry, canary deployment is a pattern for rolling out releases to a subset of users or servers. The idea is to first deploy the change to a small subset of servers, test it, and then roll the change out to the rest of the servers. The canary deployment serves as an early warning indicator and limits the impact of a bad release: if the canary deployment fails, the rest of the servers aren't impacted.
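A minimal Python sketch of server-based canary deployment follows. It assumes the fleet is modelled as a dictionary of hypothetical server names to deployed versions, that "deploying" is simulated by updating that dictionary, and that `is_healthy` is a placeholder for whatever tests or checks you run against a canary after it receives the new version.

```python
from typing import Callable, Dict


def canary_deploy(fleet: Dict[str, str], new_version: str,
                  is_healthy: Callable[[str], bool], canary_count: int = 1) -> bool:
    """Roll new_version out to a small canary subset first, then to the rest.

    fleet maps server name -> deployed version and is updated in place.
    Returns True if the rollout completed, False if the canaries were rolled back.
    """
    servers = sorted(fleet)
    canaries = servers[:canary_count]
    previous = {server: fleet[server] for server in canaries}

    for server in canaries:
        fleet[server] = new_version  # stand-in for a real deployment to this server

    if not all(is_healthy(server) for server in canaries):
        fleet.update(previous)  # roll the canaries back; other servers were never touched
        return False

    for server in servers[canary_count:]:
        fleet[server] = new_version
    return True


fleet = {"web-1": "1.0", "web-2": "1.0", "web-3": "1.0", "web-4": "1.0"}
print(canary_deploy(fleet, "1.1", is_healthy=lambda server: True))  # True
print(fleet)  # every server now runs 1.1
```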

Canary Deployment Pattern

(Source: Octopus Deploy)

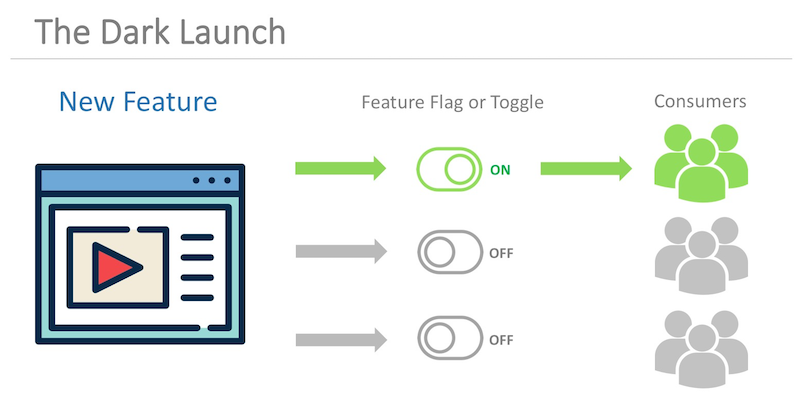

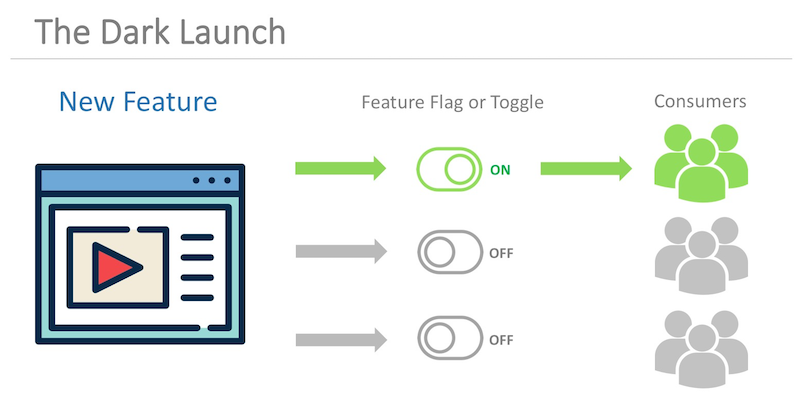

Facebook and Google, along with many other leading tech giants, use a canary deployment pattern called “The Dark Launch”. They gradually release and test new features with a small set of their users before releasing them to everyone. This lets them see whether you love or hate a feature and assess how it impacts their systems’ performance. Facebook calls its dark launching tool “Gatekeeper” because it controls consumer access to each new feature.

The Dark Launch

(Source: TechCo Media)

It is called a dark launch because these feature launches are typically not publicized; instead, they are stealthily rolled out to 1 percent, then 5 percent, then 30 percent of users and so on. Sometimes a new feature will dark launch for a few days and then you will never see it again, most likely because it did not perform well or because the company just wanted some initial feedback to guide development.
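One common way to implement a 1, 5, 30 percent rollout is deterministic bucketing: hash the user ID into a stable bucket from 0 to 99 and enable the feature when the bucket is below the rollout percentage. The Python sketch below illustrates that general technique; it is not a description of how Gatekeeper works internally, and the feature name `new_timeline` and the user IDs are hypothetical.

```python
import hashlib


def rollout_bucket(feature: str, user_id: str) -> int:
    """Map a user to a stable bucket 0..99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(feature: str, user_id: str, rollout_percent: int) -> bool:
    # A user enabled at 1 percent stays enabled at 5 and 30 percent, because the
    # bucket never changes and only the threshold grows.
    return rollout_bucket(feature, user_id) < rollout_percent


users = [f"user-{n}" for n in range(10_000)]
for percent in (1, 5, 30):
    enabled = sum(is_enabled("new_timeline", u, percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users")
```

Hashing the feature name together with the user ID keeps different experiments from always landing on the same small group of users.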

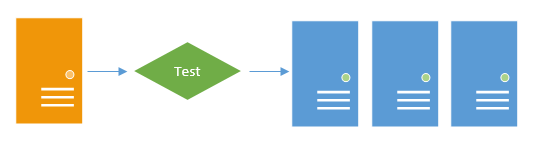

Cluster Immune System Release Pattern

This release pattern is an extension of the canary deployment pattern. It requires performance and software activity monitoring systems that are tightly integrated with your release process.

In the canary deployment pattern depicted above, once the initial deployment on the orange server is done, your monitoring systems record all system, performance and software activity metrics and raise early alerts if any of them exceeds its defined threshold. Such an alert triggers an automated rollback of the installed code from the orange server.

If the automated tests on the orange server and the monitoring system both report positive outcomes, your DevOps team can deploy its code to all of the other blue server clusters with far more confidence. In summary, the Cluster Immune System Release Pattern offers your DevOps team:

- An additional safeguard against issues that test automation might miss.

- Quick feedback and automated rollback for production issues.
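The threshold-and-rollback loop described above can be sketched in Python as follows. The metric names, the threshold values and the `watch_canary` function are hypothetical; a real implementation would read samples from your monitoring system instead of a list, and would derive limits from your system's normal baseline.

```python
from typing import Callable, Dict, List

# Hypothetical alert thresholds; real limits come from your system's normal baseline.
THRESHOLDS = {"error_rate": 0.01, "p95_latency_ms": 500.0, "cpu_percent": 85.0}


def breached_metrics(sample: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    # A missing metric is treated as a breach, so a silent canary is never trusted.
    return [name for name, limit in thresholds.items()
            if sample.get(name, float("inf")) > limit]


def watch_canary(samples: List[Dict[str, float]],
                 rollback: Callable[[List[str]], None]) -> bool:
    """Check successive metric samples from the canary server.

    Triggers rollback on the first breach and returns False; returns True if all
    samples stay within thresholds, meaning the remaining clusters can follow.
    """
    for sample in samples:
        breached = breached_metrics(sample)
        if breached:
            rollback(breached)
            return False
    return True


samples = [
    {"error_rate": 0.002, "p95_latency_ms": 310.0, "cpu_percent": 55.0},
    {"error_rate": 0.040, "p95_latency_ms": 320.0, "cpu_percent": 58.0},
]
ok = watch_canary(samples, rollback=lambda reasons: print("rolling back canary:", reasons))
print(ok)  # False
```

Treating missing data as a breach is a deliberately conservative choice in this sketch: if monitoring cannot see the canary, the safer action is to roll it back.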

Feature Toggles

With feature toggles you can switch features of your application on and off. Therefore, once all the code required for a certain feature is deployed to your production system, releasing this feature is nothing more than switching on its toggle. Feature toggles are usually settings in runtime system configuration files or system configuration databases.
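A minimal feature toggle can be sketched in Python as a lookup in runtime configuration. The sketch assumes the configuration is a JSON document and uses hypothetical flag names (`new_checkout`, `view_invoices`); a real system would reload the file or query the configuration database rather than hold a parsed copy in memory.

```python
import json
from typing import Dict


class FeatureToggles:
    """Feature flags loaded from a runtime configuration document."""

    def __init__(self, config_text: str):
        self._flags: Dict[str, bool] = json.loads(config_text)

    def is_on(self, feature: str) -> bool:
        # Unknown features default to off, so deployed-but-unreleased code stays dark.
        return bool(self._flags.get(feature, False))

    def set(self, feature: str, enabled: bool) -> None:
        self._flags[feature] = enabled


toggles = FeatureToggles('{"new_checkout": false, "view_invoices": true}')


def checkout() -> str:
    # Both code paths are already deployed; the toggle decides which one runs.
    if toggles.is_on("new_checkout"):
        return "new checkout flow"
    return "legacy checkout flow"


print(checkout())  # legacy checkout flow
toggles.set("new_checkout", True)  # the "release": no code change, no deployment
print(checkout())  # new checkout flow
```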

Thanks to feature toggles, your DevOps team is now able to switch off a feature if a canary deployment results in errors or a suboptimal user experience. With feature toggles, you can also disable resource-intensive but relatively less important features if your system struggles to scale. So even though your system may not be able to scale with all of its features enabled, you can still give your clients the best throughput on the most critical features of your software. For example, in an e-commerce portal you can temporarily switch off the toggle for viewing invoices, so that your checkout flow has more CPU and memory resources available.
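The invoice example can be extended into a simple load-shedding rule: rank features by business importance and switch off the least important ones first as load rises. In the Python sketch below, the feature priorities and the 75 and 90 percent CPU watermarks are illustrative assumptions, not recommended values.

```python
from typing import Dict

# Hypothetical priorities: 0 is business-critical, higher numbers are less important.
FEATURE_PRIORITY = {"checkout": 0, "search": 1, "view_invoices": 2}


def max_allowed_priority(cpu_percent: float) -> int:
    # Illustrative watermarks; tune them to your own capacity data.
    if cpu_percent >= 90.0:
        return 0
    if cpu_percent >= 75.0:
        return 1
    return max(FEATURE_PRIORITY.values())


def toggle_states(cpu_percent: float) -> Dict[str, bool]:
    """Switch off the least important features first as load increases."""
    allowed = max_allowed_priority(cpu_percent)
    return {feature: priority <= allowed for feature, priority in FEATURE_PRIORITY.items()}


print(toggle_states(50.0))  # {'checkout': True, 'search': True, 'view_invoices': True}
print(toggle_states(80.0))  # {'checkout': True, 'search': True, 'view_invoices': False}
print(toggle_states(95.0))  # {'checkout': True, 'search': False, 'view_invoices': False}
```

Checkout is never switched off in this sketch, which matches the goal of protecting the most critical flow.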

Furthermore, in a service-oriented architecture, your DevOps teams can deploy different versions of services without switching them on. Once all the new service versions required for a new feature or service flow are deployed, you can switch on the toggles of these services to enable the new service flow.
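A Python sketch of this idea: the new service flow is gated on the toggles of every service version it depends on, so it stays off until the last required service is switched on. The service names (`pricing-v2`, `inventory-v2`, `payments-v2`) and the order flow are hypothetical.

```python
from typing import Dict, List

# Hypothetical: each new service version was deployed dark behind its own toggle.
service_toggles: Dict[str, bool] = {"pricing-v2": False, "inventory-v2": False, "payments-v2": False}
NEW_ORDER_FLOW_REQUIRES: List[str] = ["pricing-v2", "inventory-v2", "payments-v2"]


def flow_enabled(required: List[str], toggles: Dict[str, bool]) -> bool:
    # The new flow runs only when every service it depends on is switched on.
    return all(toggles.get(service, False) for service in required)


def place_order() -> str:
    if flow_enabled(NEW_ORDER_FLOW_REQUIRES, service_toggles):
        return "new order flow"
    return "legacy order flow"


service_toggles["pricing-v2"] = True
service_toggles["inventory-v2"] = True
print(place_order())  # legacy order flow (payments-v2 is still off)
service_toggles["payments-v2"] = True
print(place_order())  # new order flow
```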

Architect for Safer Releases

There is no perfect, one-size-fits-all architecture for all software products and services at all scales.

When the IT platform of a startup is initially built, a monolithic architecture is most often the first choice, to ensure the quickest and cheapest entry to the market and to validate the business case. The problem with monolithic architectures is that functionally distinguishable aspects (core functions, supplementary functions, user and system interfaces, and integrations) are all interwoven, rather than being contained in architecturally separate components. Over their lifespans, many large organizations, including Amazon, Google, Facebook and eBay, needed to abandon their monolithic architectures in order to scale rapidly and to enable low-risk releases and expansions of the features they serve to their clients. Their next direction was service-oriented (or micro-service) architectures, adopted with the help of the Strangler Application Pattern.

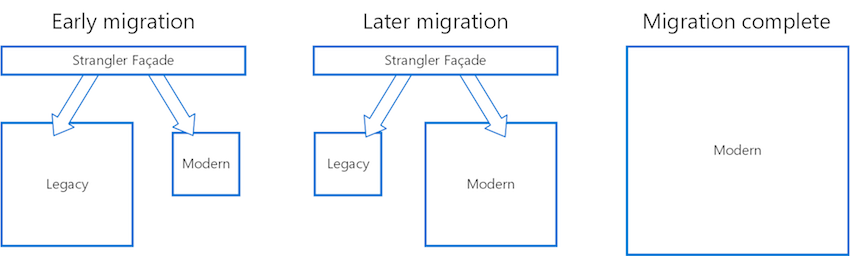

Strangler Application Pattern To Enable Low Risk Migrations to Micro-Services

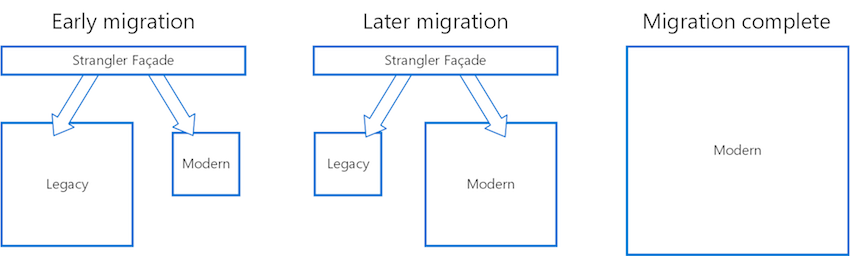

Completely replacing your complex system can be a huge undertaking. Often, you will need a gradual migration to a new system, while keeping the old system to handle features that haven't been migrated yet. However, running two separate versions of an application means that clients have to know where particular features are located. Every time a feature or service is migrated, clients need to be updated to point to the new location.

The Strangler Application Pattern incrementally replaces specific pieces of functionality with new applications and services. You create a façade that intercepts requests going to the backend legacy system. The façade routes these requests either to the legacy application or to the new services. Existing features can be migrated to the new system gradually, and consumers can continue using the same interface, unaware that any migration has taken place.
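The façade can be sketched in Python as a router that sends migrated path prefixes to new services and everything else to the legacy application. The handlers, paths and the `StranglerFacade` class are hypothetical stand-ins for real HTTP services and a real gateway.

```python
from typing import Callable, Dict

# A handler takes a request path and returns a response body.
Handler = Callable[[str], str]


class StranglerFacade:
    """Single entry point that routes each request to the legacy system or a new service."""

    def __init__(self, legacy: Handler):
        self.legacy = legacy
        self.migrated: Dict[str, Handler] = {}

    def migrate(self, path_prefix: str, service: Handler) -> None:
        # Moving a feature is a routing change; clients keep calling the façade.
        self.migrated[path_prefix] = service

    def handle(self, path: str) -> str:
        matches = [prefix for prefix in self.migrated if path.startswith(prefix)]
        if matches:
            # The longest prefix wins, so a more specific migration takes precedence.
            return self.migrated[max(matches, key=len)](path)
        return self.legacy(path)


facade = StranglerFacade(legacy=lambda path: f"legacy monolith handled {path}")
facade.migrate("/auction", lambda path: f"auction service handled {path}")
print(facade.handle("/auction/42"))  # auction service handled /auction/42
print(facade.handle("/account/7"))   # legacy monolith handled /account/7
```

This sketch matches raw string prefixes, so `/auction` would also capture `/auctions`; a production façade would usually match on whole path segments instead.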

eBay was one of the first organizations to use the Strangler Application Pattern to migrate its legacy monolithic architecture to micro-services. It started the migration merely by putting its applications behind well-defined universal APIs in the eBay ecosystem, so it didn’t have to rewrite all existing code in one single migration attempt. It reorganized its engineering groups into small teams of 6 to 10 engineers. A team of no more than 10 people is now able to handle the universal API for the auction platform, which is the most frequently used auction API in the world.

Strangler Application Pattern

(Source: Microsoft Azure Design Patterns)

CONCLUSION

In most IT departments, teams are not held responsible for building future-proof, scalable and safer deployment patterns and their associated architectures. And yet, building an architecture and application features that enable your teams to deploy faster and more safely is a major prerequisite for succeeding with DevOps.

Only by enabling your teams to do low-risk, fast and daily deployments can you and your teams reap the benefits of continuous deployment and smooth releases.

DEVOPS-CERTIFICATION.ORG
